You’ve seen the headlines. You’ve heard the outrage. The rise of non-consensual explicit deepfakes (AI-generated, hyper-realistic fakes) is one of the nastiest digital threats we face.

Non-consensual explicit deepfakes are a form of gender-based violence that violates privacy and leaves serious, long-term damage.

And after all the big public messes (like the Taylor Swift deepfake fiasco), Google has finally said enough is enough.

They’ve rolled out a major, multi-pronged counterattack in their search engine, and it’s a big deal.

But is it enough to finally win the “whack-a-mole” game against online abuse?

The Threat is Real

Before diving into Google’s response, let’s get a sense of the scale of the problem.

Deepfakes are synthetic media created with sophisticated AI that can make it look like a person is doing or saying something they absolutely didn’t.

And here’s the kicker: this digital abuse disproportionately targets women.

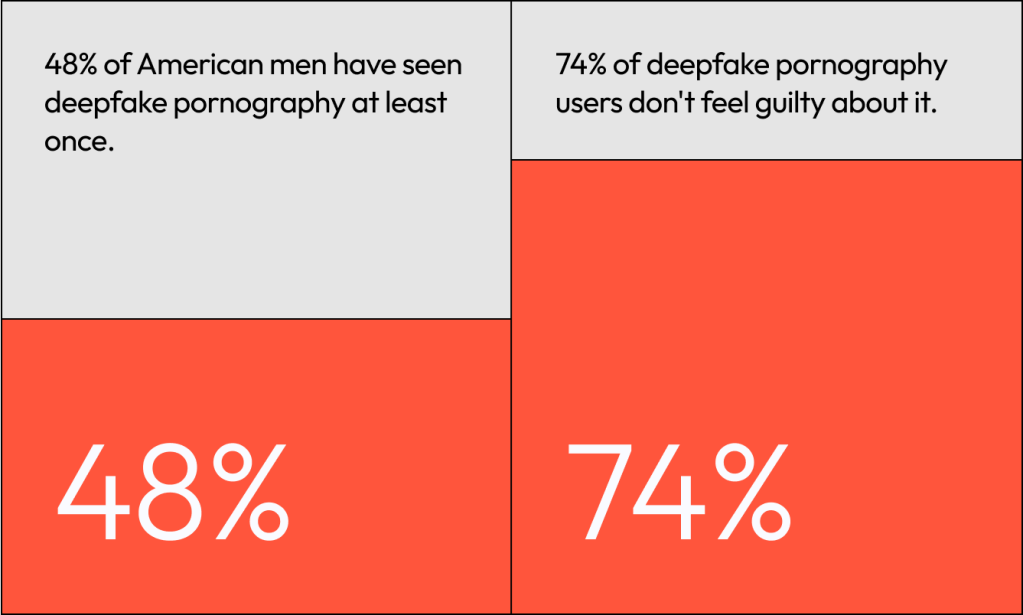

A report by Sensity AI found a shocking 96% of all deepfake videos online were non-consensual sexual deepfakes, and 99% of these targeted women.

This shows that the problem isn’t just some technical glitch but a serious violation of sexual privacy that is massive and fast-moving.

Deepfake creation tools are now so cheap and easy to use that some services can make a video for as little as $2.99. That content is generated and re-uploaded faster than platforms can remove it.

It’s a never-ending cycle of abuse!

Google’s Response To The Threat Of Deepfakes

Google’s old approach of just reacting to takedown requests was clearly failing. Their new strategy is an all-out, three-part effort that moves from simple content removal to a total algorithmic overhaul.

Instead of just waiting for users to report links, Google is proactively changing the way it ranks and filters content.

How are they doing this?

- Site Demotion: If a website is a repeat offender (constantly hosting or sharing explicit deepfake content), Google lowers its overall search ranking. This acts as a severe penalty, burying the site deep in the search results where few people will find it.

- The E-E-A-T Quality Filter: Google’s search algorithm now leans harder on its quality framework: Experience, Expertise, Authoritativeness, and Trustworthiness. For sensitive searches (especially those involving a person’s name), the system is designed to prioritize reputable, non-explicit content, like news articles or educational sites, instead of harmful images. The goal is to change the search outcome from finding deepfakes to educating the user about the issue.
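To make the site demotion idea concrete, here’s a toy sketch in Python. Google hasn’t published its actual formula, so everything here is an assumption made for illustration: the `Result` class, the `rerank` function, the threshold of three upheld removals, and the 0.1 penalty multiplier are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical values chosen for illustration; Google has not published its formula.
REPEAT_OFFENDER_THRESHOLD = 3   # upheld removals before a site counts as a repeat offender
DEMOTION_FACTOR = 0.1           # multiplier applied to a repeat offender's relevance score


@dataclass
class Result:
    url: str
    site: str
    relevance: float  # base relevance score, higher is better


def rerank(results, upheld_removals):
    """Return results sorted by relevance after demoting repeat-offender sites.

    upheld_removals maps a site name to its count of approved takedown requests.
    """
    def adjusted(result):
        if upheld_removals.get(result.site, 0) >= REPEAT_OFFENDER_THRESHOLD:
            return result.relevance * DEMOTION_FACTOR
        return result.relevance

    return sorted(results, key=adjusted, reverse=True)


results = [
    Result("https://offender.example/clip", "offender.example", 0.95),
    Result("https://news.example/deepfake-explainer", "news.example", 0.80),
    Result("https://edu.example/media-literacy", "edu.example", 0.60),
]
ranked = rerank(results, {"offender.example": 7})
print([r.site for r in ranked])
```

In this sketch the offending site starts with the highest relevance score but ends up last, behind the news and educational results, which is the kind of outcome the demotion policy is aiming for.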

Google is claiming some success, reporting a reduction of over 70% in exposure to explicit image results for specific, targeted queries.

That’s a moderate win!

Google has also streamlined the process for victims to get their content removed.

Their updated policy explicitly lists “involuntary fake pornography” as content that will be removed upon request.

This is huge because once a removal request is approved, their systems will “scan the web to remove duplicate or similar content.”

This is their best shot at solving the “whack-a-mole” problem by preventing the same content from instantly popping back up with a different URL.
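How might a system recognize a re-upload at a new URL? Google hasn’t said how its duplicate scanning works, but one widely used technique for this kind of job is perceptual hashing, where visually similar images produce similar fingerprints. Below is a minimal sketch of a simple “average hash” in Python. The function names, the tiny 4x4 grayscale grids, and the `max_distance` cutoff are assumptions for illustration, not a description of Google’s systems.

```python
def average_hash(pixels):
    """Compute a simple average hash from a 2D grid of grayscale values (0-255).

    Each bit is 1 when the pixel is brighter than the grid's mean.
    """
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return [1 if value > mean else 0 for value in flat]


def hamming_distance(hash_a, hash_b):
    """Count the positions at which two equal-length hashes differ."""
    return sum(a != b for a, b in zip(hash_a, hash_b))


def is_near_duplicate(pixels_a, pixels_b, max_distance=2):
    """Treat two images as near duplicates when their hashes differ in few bits."""
    distance = hamming_distance(average_hash(pixels_a), average_hash(pixels_b))
    return distance <= max_distance


original = [
    [10, 200, 10, 200],
    [200, 10, 200, 10],
    [10, 200, 10, 200],
    [200, 10, 200, 10],
]
# A re-upload with slightly shifted brightness, as re-encoding might produce.
reupload = [[min(255, value + 5) for value in row] for row in original]
# An unrelated image with a different light/dark layout.
unrelated = [
    [200, 200, 200, 200],
    [200, 200, 200, 200],
    [10, 10, 10, 10],
    [10, 10, 10, 10],
]

print(is_near_duplicate(original, reupload))
print(is_near_duplicate(original, unrelated))
```

The point of the fingerprint approach is that it matches on what the image looks like rather than on its URL or exact bytes, so a lightly altered copy can still be flagged. Real systems would work on full-size images and video frames and use far more robust hashes.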

The detection technology has to keep up with the deepfake creators in what’s being called an “AI arms race.”

Google is collaborating with outside experts on advanced systems like UNITE, which is designed to spot forgeries beyond simple facial swaps.

This system looks at the full video frame, including backgrounds and motion patterns, to catch subtle inconsistencies that older detectors would miss.

Not All Victims are Equal

Google’s made some serious progress, but let’s be real: not everyone’s getting the same level of protection.

The reported 70% reduction seems to be most effective for high-profile, celebrity-related searches – the ones that garner huge media attention.

Surprisingly, deepfakes of “relatively less well-known social media influencers can still be found in the first three pages of Google search results.”

This suggests the new systems may not yet provide the same level of safety for the general public as they do for high-signal, celebrity-related cases.

Historically, Google was a major facilitator of this abuse: data from 2023 showed they were the “single largest driver” of web traffic to deepfake pornography sites. So while they’re taking decisive action now, the persistence of the problem for lesser-known individuals shows there’s a long road ahead.

Google isn’t alone, but the industry response is a mess of fragmented approaches:

| Company | Strategy | Key Takeaway |
| --- | --- | --- |
| Google | Algorithmic intervention and site demotion in search. | Focuses on a technical solution within its core product to reduce visibility. |
| Meta | Policy clarification via its independent Oversight Board. | Focuses on governance and policy to ensure clarity on content rules. |
| Microsoft | Legislative advocacy and public call for stronger regulation. | Pushes for legal and criminal penalties instead of just internal fixes. |

The absence of a unified strategy leads to a messy mix of protections around the world.

That’s why fresh legal ideas, like the proposed “Take It Down Act,” are so important.

This law would require platforms to set up a notice-and-takedown system for people affected.

They’d need to make “reasonable efforts to remove duplicates or reposts” within just 48 hours, and there would be serious legal consequences if they didn’t follow through.
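For a sense of what a 48-hour window means in practice, here’s a small Python sketch of the deadline arithmetic a platform’s takedown queue might track. It is a simplified reading of the requirement as described above, not legal guidance, and the names `REMOVAL_WINDOW`, `removal_deadline`, and `is_overdue` are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# The 48-hour window described for the proposed Act; treated here as a fixed constant.
REMOVAL_WINDOW = timedelta(hours=48)


def removal_deadline(received_at):
    """Return the time by which a reported item should be removed."""
    return received_at + REMOVAL_WINDOW


def is_overdue(received_at, now):
    """Return True when the removal window has already passed."""
    return now > removal_deadline(received_at)


# Example: a notice received on a Monday morning (UTC).
received = datetime(2025, 1, 6, 9, 0, tzinfo=timezone.utc)
print(removal_deadline(received).isoformat())
print(is_overdue(received, received + timedelta(hours=47)))
print(is_overdue(received, received + timedelta(hours=49)))
```

Using timezone-aware timestamps matters here, since notices and platform servers can sit in different time zones and the clock starts when the notice arrives.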

Final Takeaway

Google has made significant, commendable progress. The move to proactive demotion and a streamlined, duplicate-removing process is a game-changer for victims.

However, this is not a problem that can be definitively “solved.”

The tech is always evolving, and the fact that lesser-known individuals are still exposed shows that the fight isn’t over.

A truly effective solution will require continued tech investment, greater transparency, industry-wide cooperation, and the support of strong, enforceable legal frameworks like the “Take It Down Act.”

What do you think is the single most important action tech companies should take next?

Let us know in the comments!